Deep Learning, NLP, and Representations

We offer Habrahabr readers a translation of the post "Deep Learning, NLP, and Representations" by Christopher Olah. The illustrations are taken from the original post.

In recent years, deep neural networks have taken the leading role in pattern recognition. They have raised the quality bar in computer vision significantly, and speech recognition is moving in the same direction.

The results are impressive, but why do these networks solve such problems so well?

This post highlights some impressive results from applying deep neural networks to natural language processing (NLP). In doing so, I hope to explain one answer to the question of why deep neural networks work.

Neural networks with one hidden layer

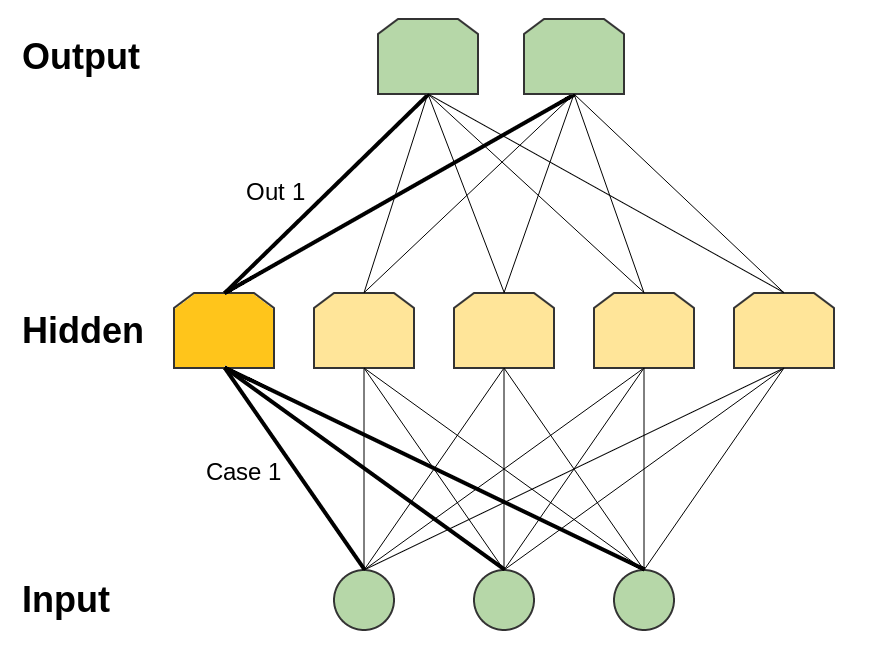

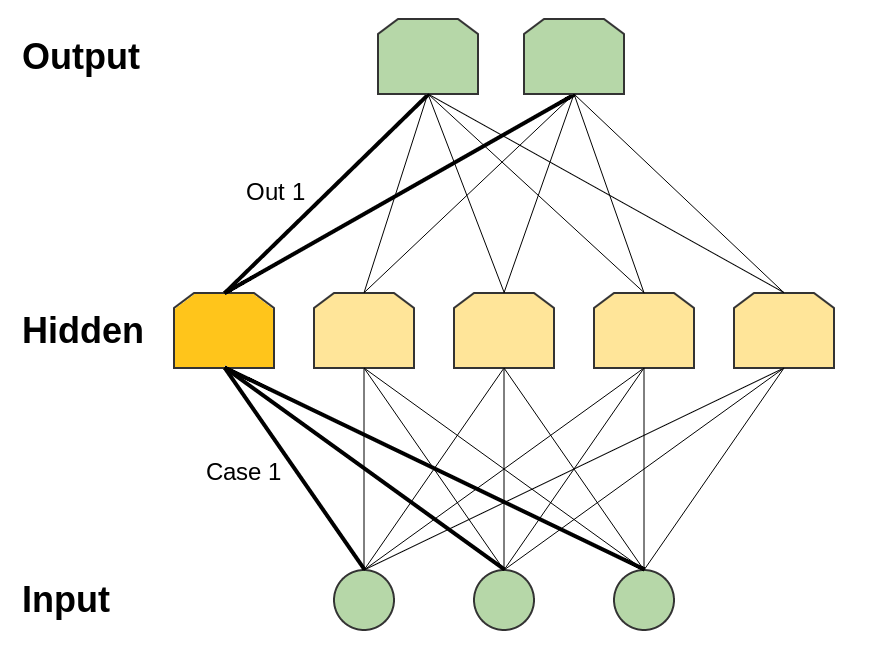

A neural network with one hidden layer is universal: given enough hidden units, it can approximate any function. This is an often-quoted (and even more often misunderstood and misapplied) theorem.

It holds because the hidden layer can simply be used as a lookup table.

For simplicity, consider perceptrons. A perceptron is a very simple neuron that fires if its input exceeds a threshold and does not fire otherwise. Take a perceptron network with binary inputs and binary outputs (i.e., 0 or 1). There are only finitely many possible inputs, so each one can be assigned its own hidden neuron that fires only for that particular input.

Conditioning on every individual input would require $2^n$ hidden neurons (for $n$ input bits). In practice it is usually not that bad: some "conditions" can match several inputs at once, and overlapping "conditions" can single out the correct inputs where they intersect.


We can then use the connections between that neuron and the output neurons to set the output for that specific input.
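A minimal Python sketch of this lookup-table construction, assuming step-activation perceptrons that fire when their weighted input plus bias is positive. The helper names (`build_lookup_network`, `run`) are our own illustration, not from the original post. Each hidden neuron gets weight +1 on the bits that must be 1 and -1 on the bits that must be 0, so it fires on exactly one input pattern; the output neuron then reads off the answer stored for that pattern.

```python
from itertools import product


def step(z):
    """Perceptron activation: fire (1) if the input is above zero, otherwise 0."""
    return 1 if z > 0 else 0


def build_lookup_network(truth_table, n):
    """One hidden perceptron per n-bit input pattern (2**n in total).

    truth_table maps each n-bit tuple to the desired 0/1 output.
    """
    hidden = []
    patterns = list(product((0, 1), repeat=n))
    for pattern in patterns:
        # +1 where the pattern has a 1, -1 where it has a 0
        weights = [1 if bit else -1 for bit in pattern]
        # the weighted sum reaches sum(pattern) only on an exact match, so this
        # bias makes the neuron fire for its own pattern and for nothing else
        bias = 0.5 - sum(pattern)
        hidden.append((weights, bias))
    # the hidden-to-output weight stores the answer for that pattern
    out_weights = [truth_table[p] for p in patterns]
    return hidden, out_weights


def run(network, x):
    hidden, out_weights = network
    h = [step(sum(w * xi for w, xi in zip(ws, x)) + b) for ws, b in hidden]
    # exactly one hidden neuron fires, so the output copies its stored answer
    return step(sum(o * hi for o, hi in zip(out_weights, h)) - 0.5)


# 3-bit parity, which no single perceptron can compute on its own
n = 3
parity = {p: sum(p) % 2 for p in product((0, 1), repeat=n)}
net = build_lookup_network(parity, n)
print(len(net[0]))  # 8 hidden neurons, i.e. 2**3
print([run(net, p) for p in sorted(parity)])
```

The hidden layer grows as `2**n`, which is why this construction demonstrates capacity rather than any ability to generalize.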

Universality is not limited to perceptrons. Networks of sigmoid neurons (and of neurons with other activation functions) are also universal: given enough hidden neurons, they can approximate any continuous function arbitrarily well. Demonstrating this is considerably harder, because you cannot simply isolate the inputs from one another.
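One common way to build intuition for the sigmoid case (our illustration, not the post's proof) is to subtract two steep sigmoids to get a "bump" that is roughly 1 on an interval and 0 elsewhere, then add up bumps weighted by the target function's value. The Python sketch below assumes a target on [0, 1]; `steepness` and `n_bumps` are illustrative parameters. Each bump uses two hidden sigmoid neurons, so the network has `2 * n_bumps` hidden units.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def bump(x, left, right, steepness):
    """Roughly 1 on [left, right) and 0 elsewhere: a difference of two steep sigmoids."""
    return sigmoid(steepness * (x - left)) - sigmoid(steepness * (x - right))


def approximate(f, x, n_bumps, steepness=500.0):
    """Output of a one-hidden-layer sigmoid network built to approximate f on [0, 1].

    The output weight of each bump is f evaluated at the bump's midpoint.
    """
    width = 1.0 / n_bumps
    total = 0.0
    for i in range(n_bumps):
        left = i * width
        total += f(left + width / 2) * bump(x, left, left + width, steepness)
    return total


def max_error(f, n_bumps):
    """Largest absolute error on a grid of interior points."""
    xs = [i / 100 for i in range(1, 100)]
    return max(abs(f(x) - approximate(f, x, n_bumps)) for x in xs)


def target(x):
    return math.sin(2 * math.pi * x)


for n in (5, 50):
    print(n, round(max_error(target, n), 3))
```

More hidden neurons (narrower bumps) give a closer fit, which is the sense in which the approximation can be made arbitrarily accurate.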

So neural networks with one hidden layer really are universal. However, there is nothing especially impressive or surprising about this. That a model can act as a lookup table is not a strong argument in favor of neural networks; it only means that the model is, in principle, capable of doing the task. Universality means only that the network can fit any training sample; it does not mean that it will interpolate sensibly to new data.

No, universality does not explain why neural networks work so well. The real answer lies somewhat deeper. To get at it, let's first look at a few concrete results.

Vector representations of words (word embeddings)

Let's start with a particularly interesting subfield of deep learning: vector representations of words (word embeddings). In my opinion, word embeddings are currently one of the hottest research topics in deep learning, even though they were first proposed by Bengio et al. more than 10 years ago.

Word embeddings were first proposed in Bengio et al. (2001) and Bengio et al. (2003), a few years before the deep learning revival of 2006, when neural networks were not yet in fashion. The idea of distributed representations as such is even older (see, for example, Hinton (1986)).

Beyond that, I think word embeddings are one of the best places to build intuition for why deep learning is so effective.

A word embedding $W: \mathrm{words} \to \mathbb{R}^n$ is a parameterized function that maps words of a natural language to high-dimensional vectors (say, 200 to 500 dimensions). For example, it might look like this:

$$W(\text{"cat"}) = (0.2, -0.4, 0.7, \ldots)$$
$$W(\text{"mat"}) = (0.0, 0.6, -0.1, \ldots)$$

(Usually this function is a lookup table parameterized by a matrix $\theta$ with one row per word: $W_\theta(w_n) = \theta_n$.)

$W$ is initialized with random vectors for each word. It then learns meaningful vectors in order to perform some task.
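A minimal Python sketch of such a lookup table, assuming a small hypothetical vocabulary and 200-dimensional vectors drawn uniformly at random (the range is an arbitrary choice). The class name `Embedding` is ours. Calling `W(word)` just returns that word's row of the parameter matrix, which is what training would later adjust.

```python
import random


class Embedding:
    """W_theta: a lookup table with one row of theta per vocabulary word."""

    def __init__(self, vocab, dim, seed=0):
        rng = random.Random(seed)
        self.index = {word: i for i, word in enumerate(vocab)}
        # theta starts as small random vectors; training would move them
        self.theta = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in vocab]

    def __call__(self, word):
        # W_theta(w_n) = theta_n: return the row belonging to this word
        return self.theta[self.index[word]]


W = Embedding(["cat", "sat", "on", "the", "mat", "song"], dim=200)
print(len(W("cat")))  # 200
print(W("cat")[:3])   # first three (random) coordinates
```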

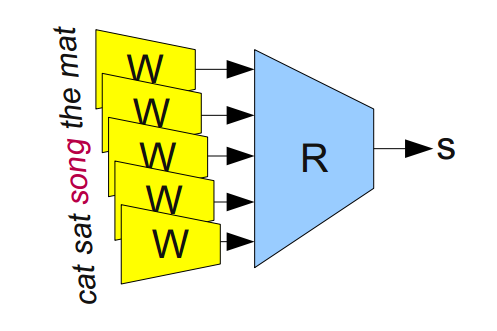

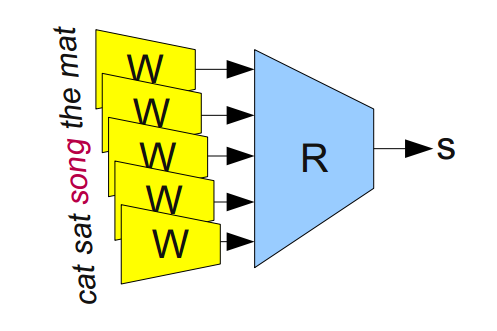

For example, we might train the network to predict whether a 5-gram (a sequence of five words, e.g. 'cat sat on the mat') is "valid". 5-grams are easy to collect from Wikipedia; we then "break" half of them by replacing one word in each with a random word (e.g. 'cat sat song the mat'), since this almost always makes the 5-gram nonsensical.

A modular network for determining whether a 5-gram is "valid" (Bottou (2011)).

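The model we train runs each word of the 5-gram through $W$ to get its vector representation and feeds these into another module, $R$, which tries to predict whether the 5-gram is "valid". We want:

$$R(W(\text{"cat"}), W(\text{"sat"}), W(\text{"on"}), W(\text{"the"}), W(\text{"mat"})) = 1$$
$$R(W(\text{"cat"}), W(\text{"sat"}), W(\text{"song"}), W(\text{"the"}), W(\text{"mat"})) = 0$$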

To predict these values accurately, the network needs to learn good parameters for both $W$ and $R$.
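The data preparation and the modular structure can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Bottou's model: the vocabulary is a toy one, the vectors and weights are random and untrained, and `R` here is plain logistic regression on the concatenated word vectors, whereas the real module is a deeper network. Training, which would adjust both `W` and `R` so that the scores approach the labels, is omitted.

```python
import math
import random


def corrupt(five_gram, vocab, rng):
    """Replace one randomly chosen word with a different random vocabulary word."""
    words = list(five_gram)
    i = rng.randrange(len(words))
    words[i] = rng.choice([w for w in vocab if w != words[i]])
    return tuple(words)


def make_dataset(five_grams, vocab, seed=0):
    """Real 5-grams get label 1; one corrupted copy of each gets label 0."""
    rng = random.Random(seed)
    data = [(g, 1) for g in five_grams]
    data += [(corrupt(g, vocab, rng), 0) for g in five_grams]
    return data


def R(vectors, weights, bias):
    """Toy scoring module: estimated probability that the 5-gram is valid."""
    flat = [x for v in vectors for x in v]  # concatenate the five word vectors
    z = sum(w * x for w, x in zip(weights, flat)) + bias
    return 1.0 / (1.0 + math.exp(-z))


rng = random.Random(1)
vocab = ["cat", "sat", "on", "the", "mat", "song", "dog", "ran"]
dim = 4
W = {w: [rng.uniform(-0.1, 0.1) for _ in range(dim)] for w in vocab}
weights = [rng.uniform(-0.1, 0.1) for _ in range(5 * dim)]

data = make_dataset([("cat", "sat", "on", "the", "mat")], vocab)
for gram, label in data:
    score = R([W[w] for w in gram], weights, 0.0)
    print(" ".join(gram), label, round(score, 3))
```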

However, this task is boring. Perhaps the solution could help detect grammatical errors in text or something similar. What is really valuable is the learned $W$.

(In fact, the whole point of the task is to learn $W$. We could have used other tasks; a very common one, for example, is predicting the next word in a sentence. But that is not our goal here. In the rest of this section we discuss many results about word embeddings without dwelling on the differences between these approaches.)

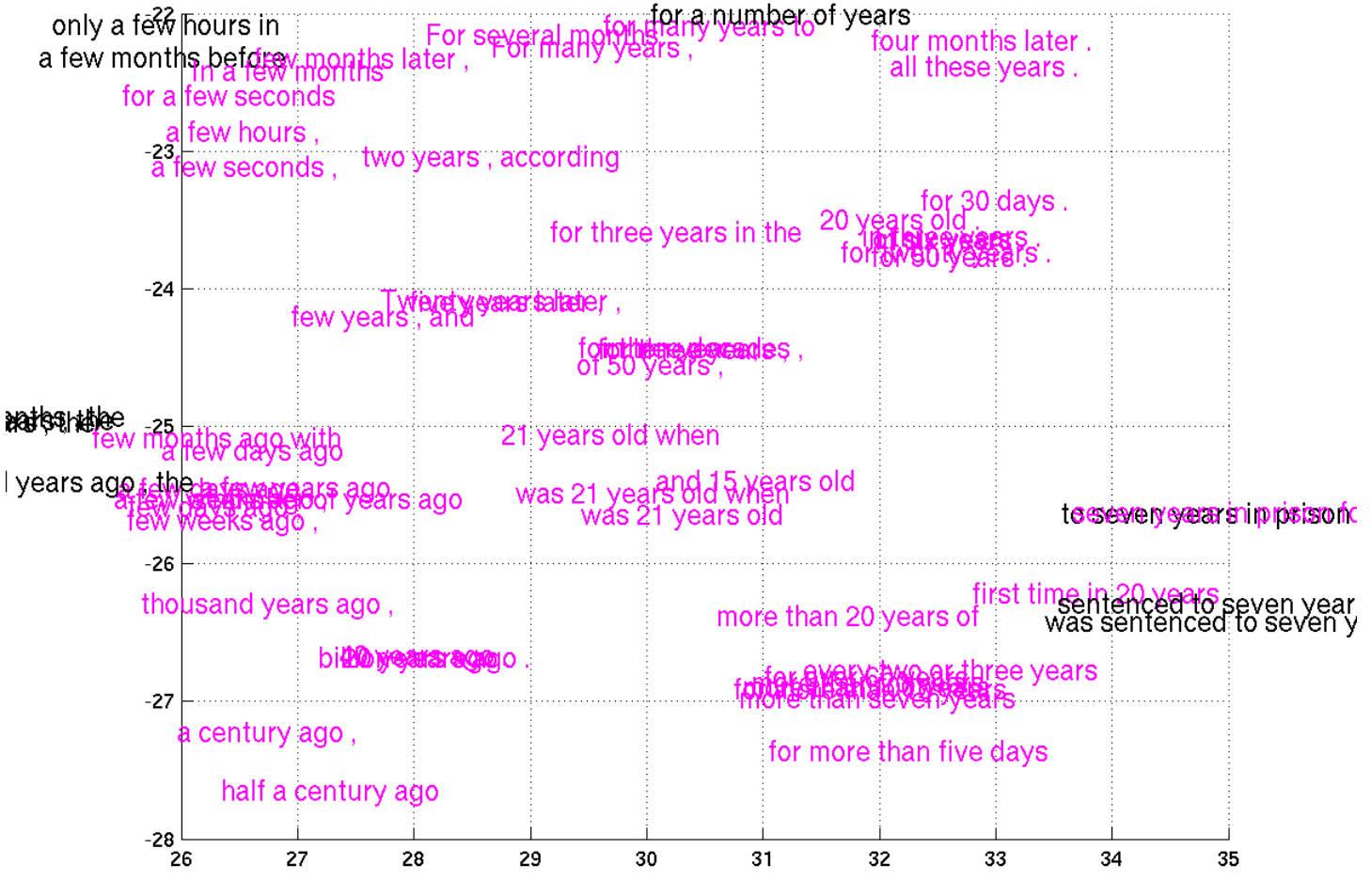

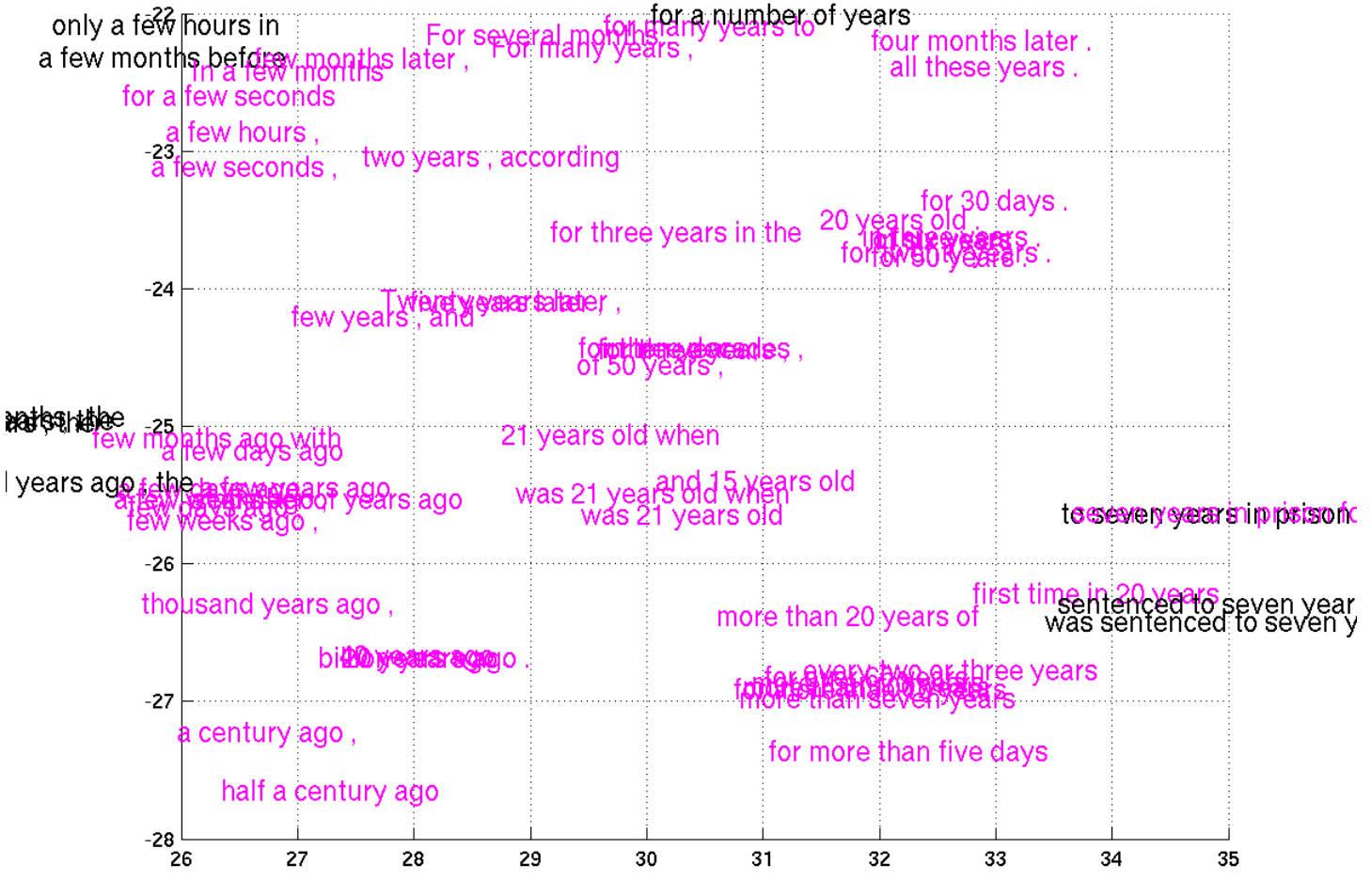

To get a feel for the structure of the embedding space, we can visualize it with t-SNE, a clever technique for visualizing high-dimensional data.

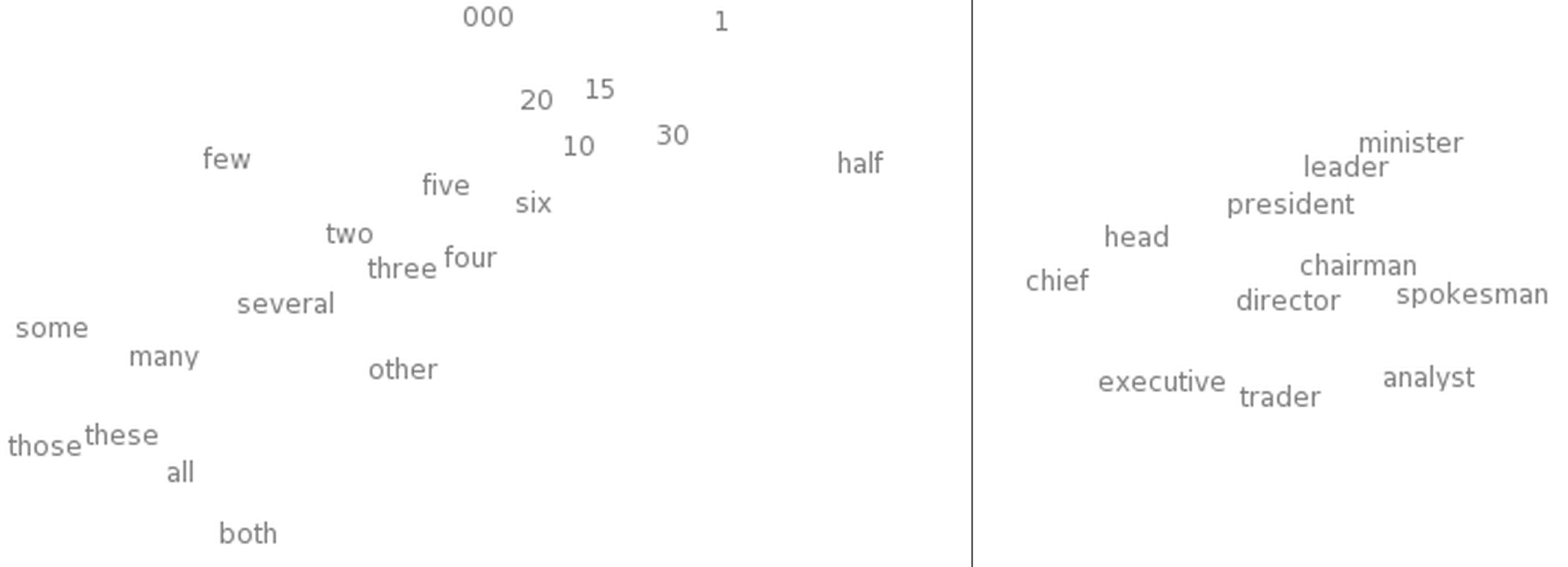

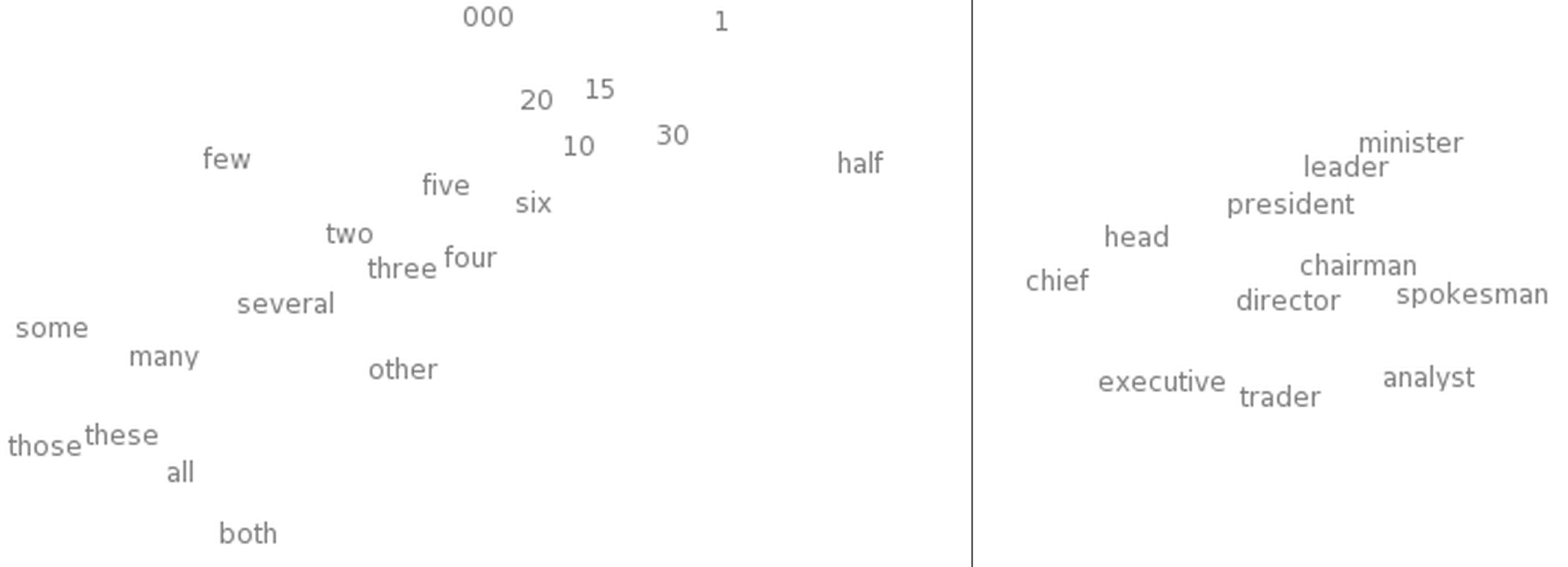

Visualization of word embeddings using t-SNE. Left: the "number region"; right: the "job region" (from Turian et al. (2010)).

This kind of "word map" looks quite meaningful. Similar words are close together, and if we check which words have representations closest to a given word, they turn out to be similar as well.

Which words have embeddings closest to a given word's embedding? (Collobert et al. (2011).)
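Nearest-neighbor queries like these are commonly answered with cosine similarity. A minimal Python sketch, assuming tiny hand-made 3-dimensional vectors that are purely illustrative (not trained values); `nearest` is a hypothetical helper name.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nearest(word, W, k=3):
    """Return the k other words whose vectors are most cosine-similar to W[word]."""
    query = W[word]
    others = [w for w in W if w != word]
    return sorted(others, key=lambda w: cosine(query, W[w]), reverse=True)[:k]


# Hand-made vectors for illustration only
W = {
    "france": [0.9, 0.1, 0.0],
    "spain": [0.85, 0.15, 0.05],
    "italy": [0.8, 0.2, 0.0],
    "dog": [0.0, 0.9, 0.3],
    "cat": [0.05, 0.85, 0.4],
}
print(nearest("france", W, k=2))  # ['spain', 'italy']
print(nearest("dog", W, k=1))     # ['cat']
```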

It seems natural for the network to map words with similar meanings to nearby vectors. If you replace a word with a synonym ("a few people sing well" → "a couple of people sing well"), the "validity" of the sentence does not change. The inputs look quite different, but since $W$ moves the representations of synonyms ("few" and "couple") close to each other, little changes for $R$.


This is a powerful tool. The number of possible 5-grams is enormous, while the training set is comparatively small. Because similar words get similar representations, a single sentence effectively lets us learn about a whole class of "similar" sentences. This goes beyond substituting synonyms: we can also substitute words of the same class ("the wall is blue" → "the wall is red"). Moreover, it makes sense to substitute several words at once ("the wall is blue" → "the ceiling is red"). The number of such "similar phrases" grows exponentially with the number of words.
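The exponential growth is easy to check by counting. Assuming (hypothetically) that each word position has a small class of interchangeable words, the number of "similar" phrases is the product of the class sizes, so with k alternatives in each of m positions it is k**m. A short Python illustration:

```python
from itertools import product

# Hypothetical substitution classes for "the wall is blue"
slots = [["the"], ["wall", "ceiling", "door"], ["is"], ["blue", "red", "green", "white"]]
phrases = [" ".join(p) for p in product(*slots)]
print(len(phrases))  # 12 = 1 * 3 * 1 * 4

# With k interchangeable words in each of m positions there are k**m phrases
k = 3
for m in range(1, 6):
    print(m, k ** m)
```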

The foundational paper A Neural Probabilistic Language Model (Bengio et al. 2003) already gives a thoughtful explanation of why word embeddings are so powerful.

Clearly this property of $W$ would be very useful. But how is it learned? Quite likely, $W$ sees the sentence "the wall is blue" many times and learns that it is valid before it ever sees "the wall is red". Moving "red" closer to "blue" then improves the network's performance.

We still need to see examples of each word being used, but analogies let us generalize to new combinations of words. We have encountered every word whose meaning we understand, yet we can understand a sentence we have never heard before. Neural networks can do the same.

Mikolov et al. (2013a)

Word embeddings have an even more remarkable property: analogies between words seem to be encoded in the vector differences between their representations. For example, there appears to be a constant male-female difference vector:

$$W(\text{"woman"}) - W(\text{"man"}) \simeq W(\text{"aunt"}) - W(\text{"uncle"})$$
$$W(\text{"woman"}) - W(\text{"man"}) \simeq W(\text{"queen"}) - W(\text{"king"})$$

Perhaps this is not very surprising. After all, gendered pronouns mean that swapping a word can break the grammatical correctness of a sentence. We write "she is the aunt" but "he is the uncle". Similarly, "he is the King" and "she is the Queen". If we see "she is the uncle", the most likely explanation is a grammatical error. If words are being replaced at random half the time, that is probably what happened.

"Of course!" we say in hindsight. "Word embeddings can learn to encode gender. There is probably a dedicated gender dimension. The same goes for singular versus plural. Relationships like these are easy to pick up!"
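Under the simplifying assumption of a dedicated gender dimension, vector-difference analogies can be reproduced exactly with hand-built toy vectors. In this Python sketch the three coordinates are hypothetical "royalty", "gender" and "family" features; `analogy` returns the word closest to `W[b] - W[a] + W[c]`, excluding the three query words, as is common in analogy evaluations. Learned embeddings satisfy such relations only approximately.

```python
import math

# Hand-built toy vectors (hypothetical): coordinates are "royalty", "gender", "family"
W = {
    "man": [0.0, 1.0, 0.0],
    "woman": [0.0, -1.0, 0.0],
    "king": [1.0, 1.0, 0.0],
    "queen": [1.0, -1.0, 0.0],
    "uncle": [0.0, 1.0, 1.0],
    "aunt": [0.0, -1.0, 1.0],
}


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def add(u, v):
    return [a + b for a, b in zip(u, v)]


def analogy(a, b, c, W):
    """Solve a : b :: c : ? with the word nearest to W[b] - W[a] + W[c]."""
    target = add(sub(W[b], W[a]), W[c])
    candidates = [w for w in W if w not in (a, b, c)]
    return min(candidates, key=lambda w: math.dist(W[w], target))


print(sub(W["woman"], W["man"]), sub(W["queen"], W["king"]))  # the same difference vector
print(analogy("man", "woman", "king", W))   # queen
print(analogy("man", "woman", "uncle", W))  # aunt
```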

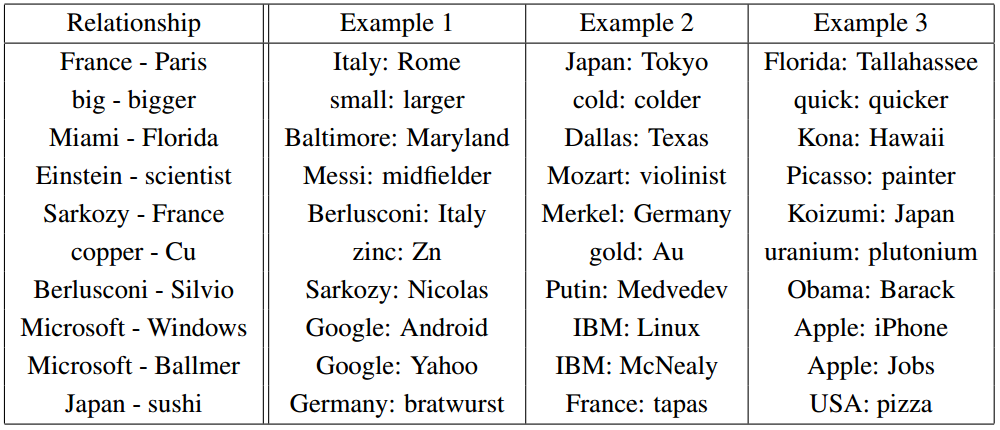

It turns out, though, that much more sophisticated relationships are encoded in the same way. It is almost like magic!

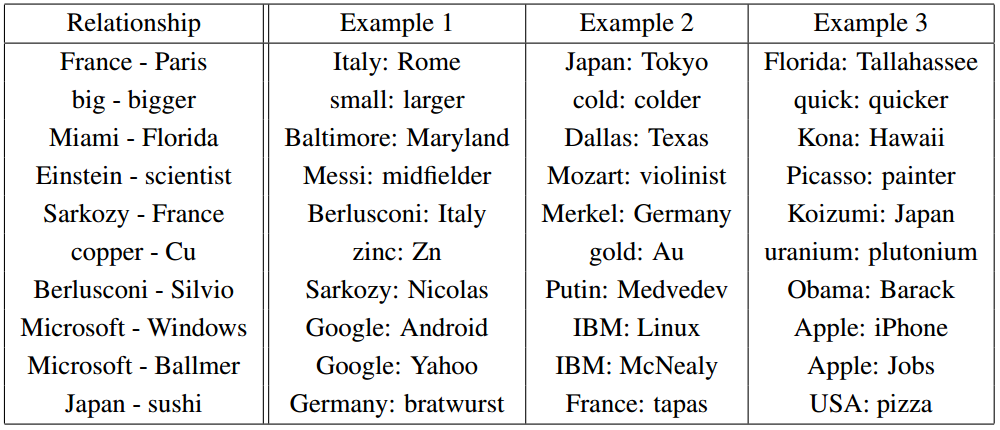

Relationship pairs (Mikolov et al. (2013b).)

It is important to note that all of these properties of $W$ are side effects. We did not require representations of similar words to be close to each other. We did not try to encode analogies as difference vectors. All we did was train the network to check whether a sentence is "valid", and these properties emerged on their own in the course of the optimization.

It seems that a great strength of neural networks is that they automatically learn better representations of the data. Data representation, in turn, is an essential part of solving many machine learning problems. Word embeddings are one of the most striking examples of learning a representation.

Shared representations

The properties of word embeddings are certainly curious, but can we use them for something useful, beyond silly tasks like checking whether a 5-gram is "valid"?

$W$ and $F$ are trained on task A. Later, $G$ can learn to perform task B using $W$.

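We trained word embeddings on simple tasks, but given the remarkable properties we have already seen, we might expect them to be useful for more general problems. And indeed, embeddings like these are extremely important:

"The use of word representations... has become a key "secret sauce" for the success of many NLP systems in recent years, across tasks including named entity recognition, part-of-speech tagging, parsing, and semantic role labeling."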

(Luong et al. (2013).)

The general strategy of learning a good representation on task A and then using it for task B is one of the major tricks in the deep learning toolbox. It goes by different names depending on the details: pretraining, transfer learning, and multi-task learning. One of the great strengths of this approach is that it lets the representation learn from more than one kind of data.
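A minimal Python sketch of the task A to task B pattern, assuming `W` has already been trained on task A (the 2-dimensional vectors below are hypothetical stand-ins) and stays frozen while a new logistic-regression head `G` is trained on task B by stochastic gradient descent. Task B here, telling color words from object words, is made up for illustration. Because `W` already places "green" near "red" and "blue", `G` also classifies words it never saw during task-B training.

```python
import math

# Stand-in for a W already trained on task A (hypothetical values); never updated below
W = {
    "red": [1.0, 0.1], "blue": [0.9, 0.0], "green": [0.95, 0.05],
    "wall": [0.0, 1.0], "door": [0.1, 0.9], "ceiling": [0.05, 0.95],
}


def features(phrase):
    """Average the frozen embeddings of the words in a phrase."""
    vecs = [W[w] for w in phrase.split()]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def logit(model, phrase):
    g, b = model
    return sum(gi * xi for gi, xi in zip(g, features(phrase))) + b


def train_G(examples, epochs=200, lr=0.5):
    """Fit only the task-B head G (logistic regression); W stays fixed."""
    model = ([0.0, 0.0], 0.0)
    for _ in range(epochs):
        for phrase, y in examples:
            p = 1.0 / (1.0 + math.exp(-logit(model, phrase)))
            err = p - y  # derivative of the log loss with respect to the logit
            g, b = model
            x = features(phrase)
            model = ([gi - lr * err * xi for gi, xi in zip(g, x)], b - lr * err)
    return model


def predict(model, phrase):
    return 1 if logit(model, phrase) > 0 else 0


# Task B (made up): is the word a color? Only four words are labeled.
train_B = [("red", 1), ("blue", 1), ("wall", 0), ("door", 0)]
G = train_G(train_B)
print(predict(G, "green"), predict(G, "ceiling"))  # 1 0
```

Keeping `W` frozen is one design choice; fine-tuning `W` together with `G` is another common variant.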

There is a counterpart to this trick. Instead of learning a representation for one kind of data and using it for many kinds of tasks, we can map several kinds of data into a single representation!

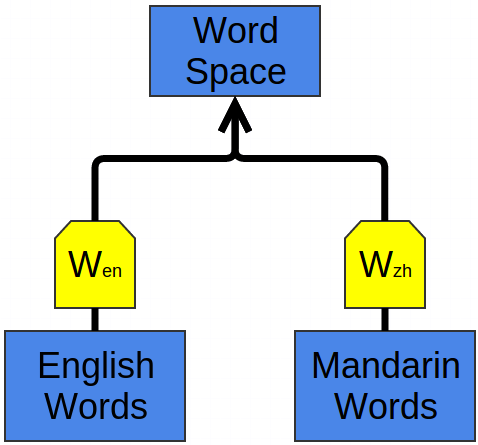

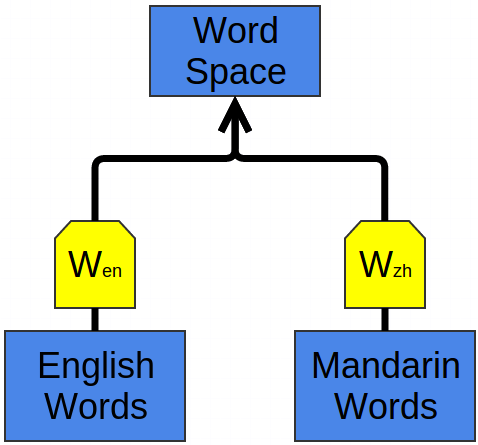

One nice example of this trick is the bilingual word embeddings of Socher et al. (2013a). We can learn to embed words from two different languages in a single shared space. In this work, they embed English words and Mandarin Chinese (Putonghua) words.

We train two word embeddings, $W_{en}$ and $W_{zh}$, in a manner similar to what we did above. However, we know that certain English and Chinese words have similar meanings. So we optimize an additional criterion: the representations of known translations should be close to each other.
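The extra criterion can be written as a penalty on the squared distance between the vectors of known translation pairs. The Python sketch below is a simplification, assuming two embedding tables called `W_en` and `W_zh` here, random starting vectors, and a hand-picked pair list in which each word appears once; in the actual work this term is optimized together with each language's own embedding objective, which is omitted. With a step size of 0.1, one gradient step shrinks each pair's difference to 0.6 of its previous value, so the loss drops to 0.36 of its previous value.

```python
import random


def alignment_loss(W_en, W_zh, pairs):
    """Sum of squared distances between the vectors of known translation pairs."""
    return sum(
        sum((a - b) ** 2 for a, b in zip(W_en[en], W_zh[zh]))
        for en, zh in pairs
    )


def alignment_step(W_en, W_zh, pairs, lr=0.1):
    """One gradient-descent step on alignment_loss (pairs assumed to share no words)."""
    for en, zh in pairs:
        diff = [a - b for a, b in zip(W_en[en], W_zh[zh])]
        # gradient of ||en - zh||^2 is 2*diff for en and -2*diff for zh
        W_en[en] = [a - lr * 2 * d for a, d in zip(W_en[en], diff)]
        W_zh[zh] = [b + lr * 2 * d for b, d in zip(W_zh[zh], diff)]


rng = random.Random(0)
W_en = {w: [rng.gauss(0, 1) for _ in range(3)] for w in ["cat", "dog"]}
W_zh = {w: [rng.gauss(0, 1) for _ in range(3)] for w in ["猫", "狗"]}
pairs = [("cat", "猫"), ("dog", "狗")]

before = alignment_loss(W_en, W_zh, pairs)
alignment_step(W_en, W_zh, pairs)
after = alignment_loss(W_en, W_zh, pairs)
print(round(before, 3), round(after, 3))
```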

Of course, we observe that the words we knew were similar end up close together. No surprise there, since we optimized for it. More interesting is that translations we did not know about also end up close together.

In light of our experience with word embeddings, this may not be too surprising. Embeddings pull similar words together, so if we know that an English word and a Chinese word mean roughly the same thing, their synonyms should also end up near each other. We also know that word pairs related by something like gender differ by a constant vector. It seems that if we align enough translations, these difference vectors will line up in the two languages as well. As a result, if the "male versions" of a word translate to each other, the "female versions" should end up correctly translated too.

Intuitively, it feels as though the two languages have a similar "shape", and by forcing them to line up at a few chosen points, we pull the other representations into the right places.

Visualization of bilingual word embeddings using t-SNE. Chinese is shown in green, English in yellow (Socher et al. (2013a)).

With two languages, we learn a shared representation for two similar kinds of data. But we can also fit very different kinds of data into a single space.

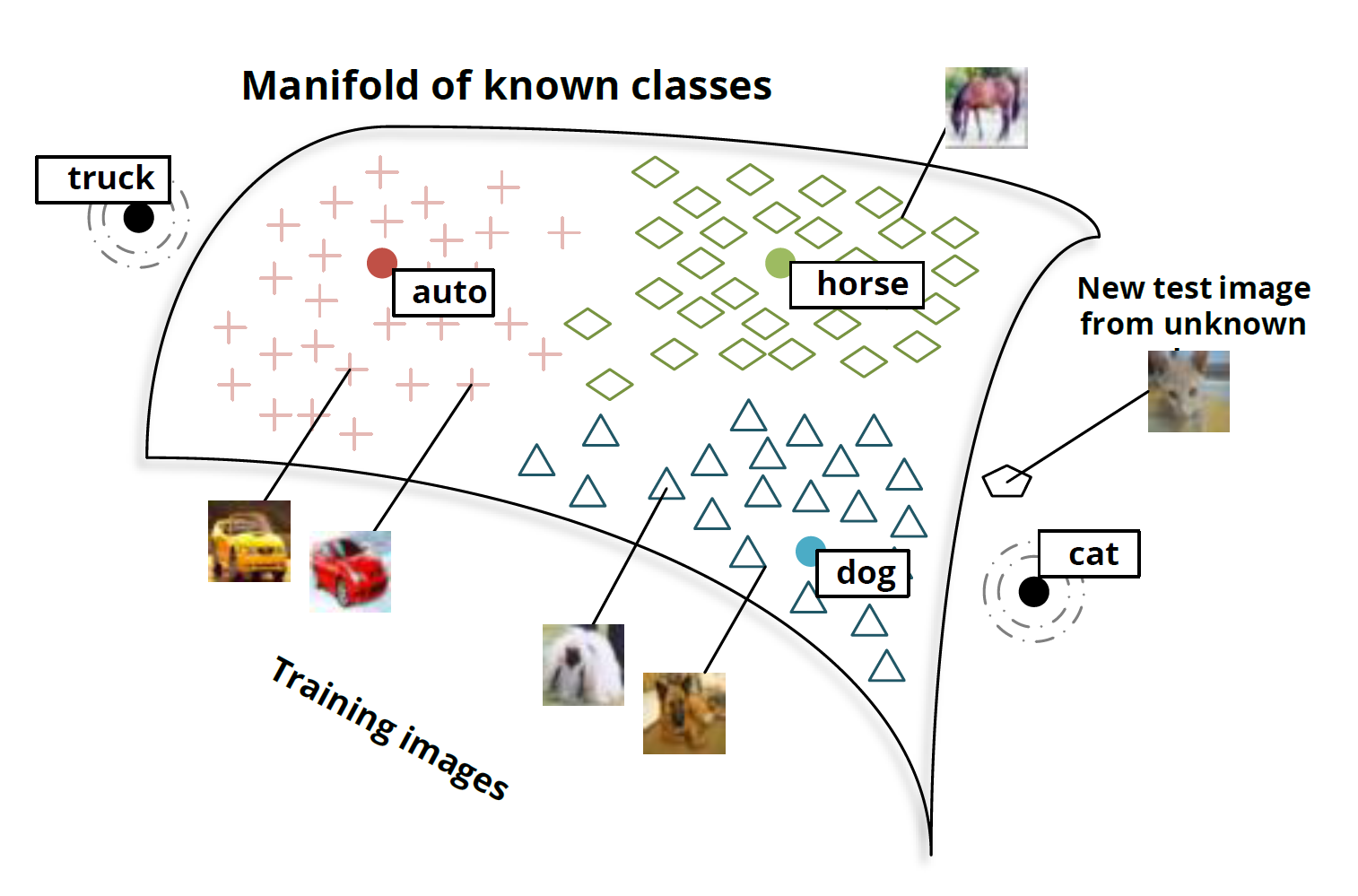

Recently, deep learning has been used to build models that embed images and words in a single representation space.

Earlier work modeled the joint distribution of tags and images, but the approach here is quite different.

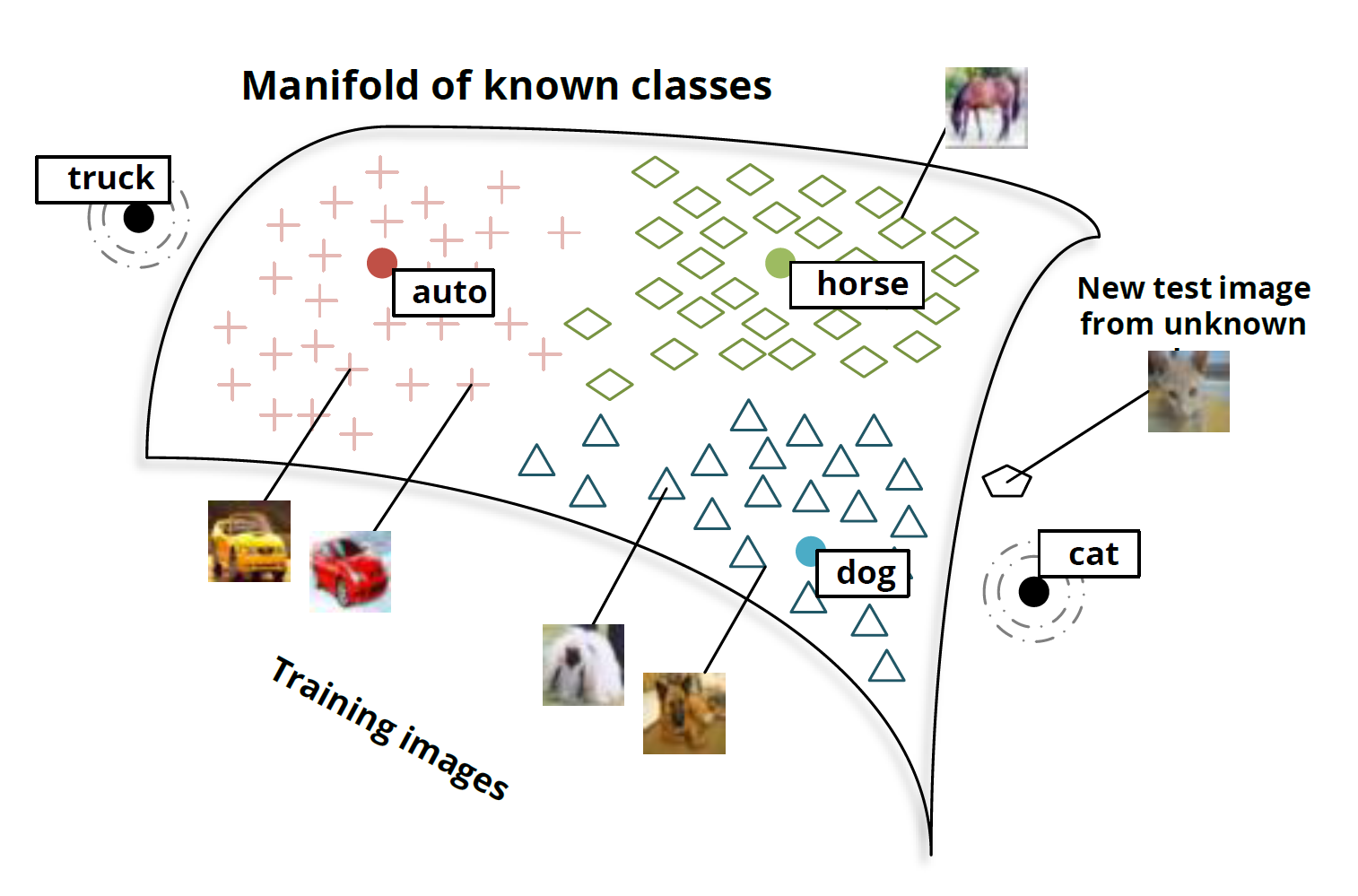

The basic idea is that we classify an image by outputting a vector in the word embedding space. Images of dogs are mapped near the "dog" word vector, images of horses near "horse", images of cars near "car", and so on.

The interesting part is what happens when you test the model on new classes of images. For example, what happens if we ask it to classify an image of a cat when it was never specifically trained to recognize cats, that is, to map them near the "cat" vector?

Socher et al. (2013b)

It turns out that the network handles new kinds of images quite well. Images of cats are not mapped to random points in the space. Instead, they land in the general neighborhood of the "dog" vector and, in fact, fairly close to the "cat" vector. Similarly, images of trucks end up closest to the "truck" vector, which is near the related "car" vector.

Socher et al. (2013b)
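A minimal Python sketch of this zero-shot step, assuming an already-trained linear map `M` from image features to word space; the matrix, the image features and the 2-dimensional word vectors below are all hypothetical. An image is labeled with the candidate word whose vector is nearest to its projection. With only the seen labels (dog, horse, car) the cat image lands on "dog", its nearest known neighbor; once unseen words are allowed as candidates, it lands on "cat".

```python
import math

# Hypothetical 2-d word vectors; "cat" and "truck" play the role of unseen classes
word_vec = {
    "dog": [1.0, 0.0],
    "horse": [0.8, -0.3],
    "car": [-1.0, 0.1],
    "cat": [0.9, 0.4],
    "truck": [-0.9, -0.3],
}

# Hypothetical trained map from 3-d image features to the 2-d word space
M = [[0.5, 0.5, 0.0],
     [0.0, 0.0, 1.0]]


def project(features, M):
    """Apply the linear map M (a list of rows) to an image feature vector."""
    return [sum(m * x for m, x in zip(row, features)) for row in M]


def classify(features, M, labels):
    """Label an image with the candidate word whose vector is nearest its projection."""
    v = project(features, M)
    return min(labels, key=lambda w: math.dist(v, word_vec[w]))


seen = ["dog", "horse", "car"]
cat_image = [0.9, 0.8, 0.5]       # projects to about [0.85, 0.5]
truck_image = [-0.9, -0.8, -0.2]  # projects to about [-0.85, -0.2]

print(classify(cat_image, M, seen), classify(cat_image, M, list(word_vec)))      # dog cat
print(classify(truck_image, M, seen), classify(truck_image, M, list(word_vec)))  # car truck
```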

The Stanford team did this with 8 known classes and 2 unknown ones. The results are already impressive. But with so few known classes, there are too few points from which to interpolate the relationship between images and the semantic space.

A Google research team built a much larger version of this at about the same time, using 1,000 categories instead of 8 (Frome et al. (2013)), and later proposed another variant (Norouzi et al. (2014)). Both works build on a strong image classification model (Krizhevsky et al. (2012)), but they place images into the word embedding space in different ways.

The results are impressive. Even when the model cannot map images of unknown classes to exactly the right vector, it does manage to reach the right neighborhood. So if we ask it to classify images from unknown classes that differ substantially from one another, it can at least tell those classes apart.

Even if I have never seen an Aesculapian snake or an armadillo, if you show me pictures of them I can tell you which is which, because I have a general idea of what sort of animal goes with each word. These networks can do the same.

(We have used the phrase "these words are similar" a lot. But it seems that much stronger results can be based on relationships between words. In the word representation space there is a consistent difference vector between the "male" and "female" versions of words. Similarly, in image representation space there are reproducible features that reveal sex. Beards, mustaches, and baldness are strong, highly visible indicators of being male. Breasts and, less reliably, long hair, makeup, and jewelry are obvious indicators of being female. I realize that physical sex characteristics can be misleading: I am not claiming that everyone who is bald is male or that everyone with breasts is female. But these features are right more often than not, which is enough to set initial expectations. Even if you have never seen a king, if you see a queen (whom you identified by her crown) with a beard, you would probably decide to use the "male version" of the word "queen".)

Shared embeddings are an incredibly exciting area of research and a very persuasive argument for the representation-focused perspective of deep learning.

Recursive neural networks

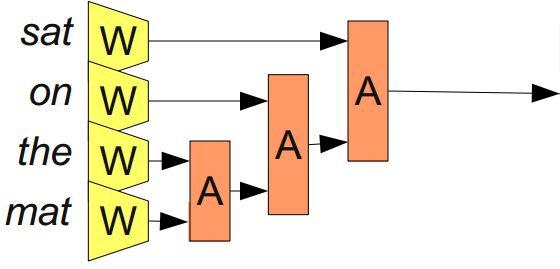

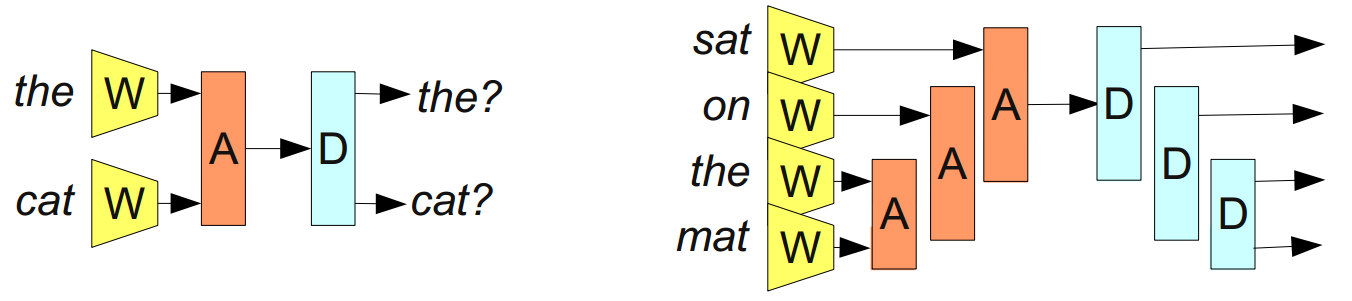

We began our discussion of word embeddings with this network:

A modular network that learns word embeddings (Bottou (2011)).

The diagram shows a modular network, $R(W(w_1), W(w_2), W(w_3), W(w_4), W(w_5))$.

It is built from two modules, $W$ and $R$. This approach of building neural networks out of smaller "neural network modules" is not very widespread. However, it has proven very successful in natural language processing.

Models like the ones described so far are powerful, but they have one annoying limitation: the number of inputs is fixed.

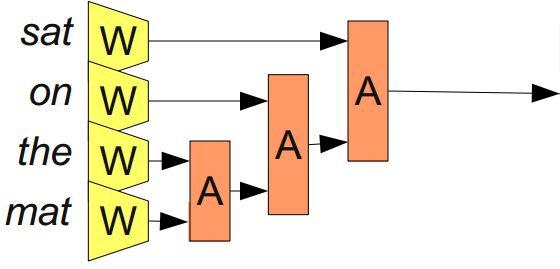

We can overcome this by adding an association module, $A$, which takes two word or phrase representations and merges them into one.

From Bottou (2011)

By merging sequences of words, $A$ lets us represent phrases and even whole sentences. And since we can merge any number of words, the number of inputs no longer has to be fixed.

It is not obvious that merging the words of a sentence strictly in order is the right approach. The sentence 'the cat sat on the mat' can be parsed into parts: '((the cat) (sat (on (the mat))))'. We can apply $A$ following this bracketing:

From Bottou (2011)

These models are often called recursive neural networks, because the output of one module is fed into another module of the same type. They are also sometimes called tree-structured neural networks.
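A minimal Python sketch of a recursive network, assuming random untrained word vectors and a single merge module `A` that applies tanh to a linear map of the two concatenated child vectors (a common choice, used here as an assumption). Trees are nested pairs whose leaves are words, and `encode` applies `A` bottom-up following the bracketing, so one fixed-size module handles sentences of any length.

```python
import math
import random

DIM = 4
rng = random.Random(0)

# Hypothetical random word vectors and merge weights (untrained, for illustration)
W = {w: [rng.uniform(-1, 1) for _ in range(DIM)] for w in ["the", "cat", "sat", "on", "mat"]}
A_weights = [[rng.uniform(-0.5, 0.5) for _ in range(2 * DIM)] for _ in range(DIM)]


def A(left, right):
    """Association module: merge two DIM-vectors into one via tanh(M [left; right])."""
    x = left + right  # list concatenation of the two child vectors
    return [math.tanh(sum(m * xi for m, xi in zip(row, x))) for row in A_weights]


def encode(tree):
    """Apply A recursively following the bracketing; leaves are words."""
    if isinstance(tree, str):
        return W[tree]
    left, right = tree
    return A(encode(left), encode(right))


# ((the cat) (sat (on (the mat))))
parse = (("the", "cat"), ("sat", ("on", ("the", "mat"))))
sentence_vec = encode(parse)
print(len(sentence_vec))  # 4
```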

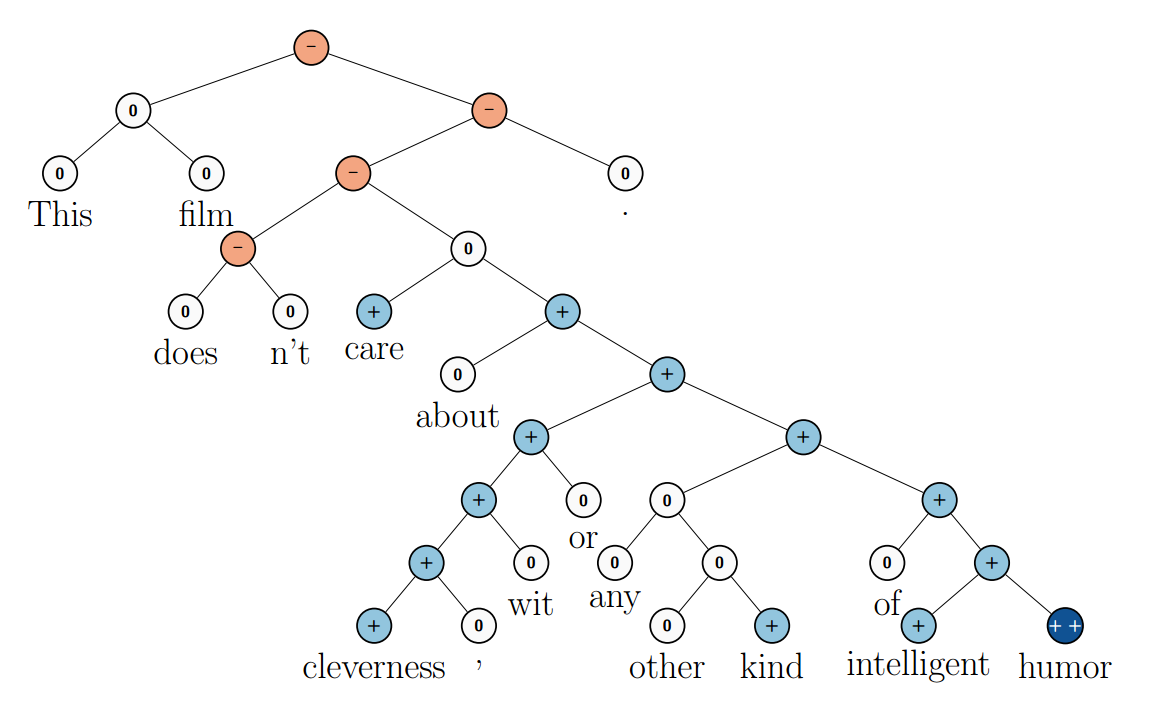

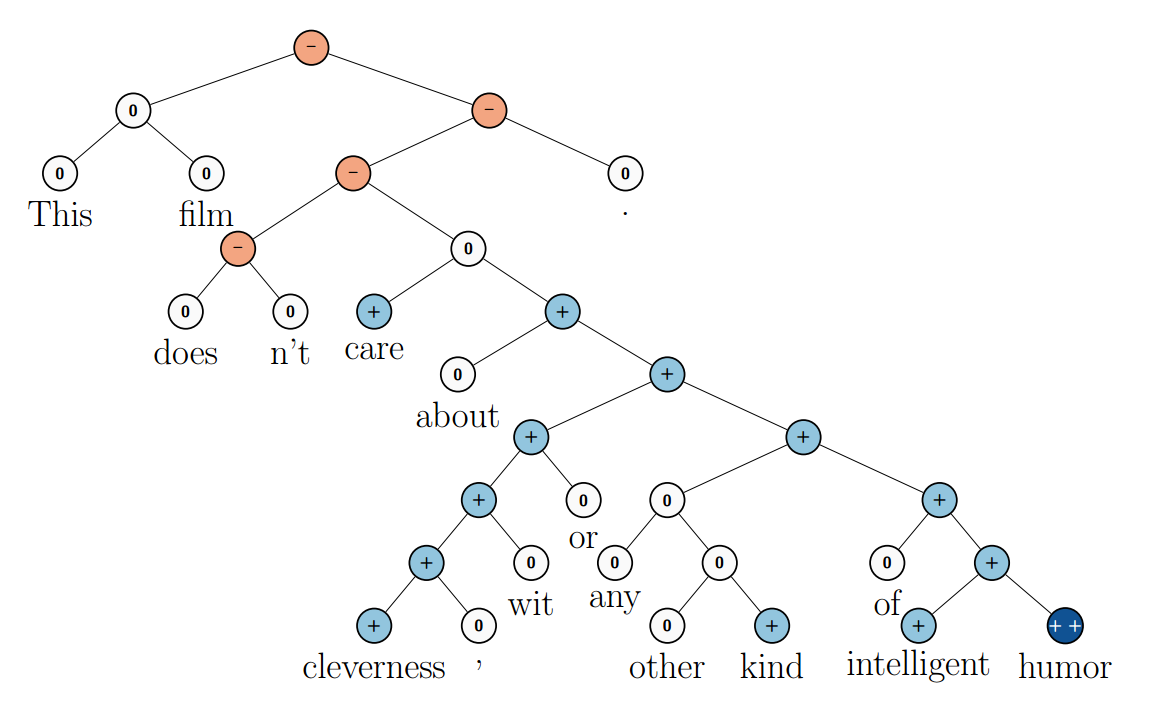

Recursive neural networks have been quite successful on several natural language processing tasks. For example, Socher et al. (2013c) use them to predict the sentiment of a sentence:

(From Socher et al. (2013c).)

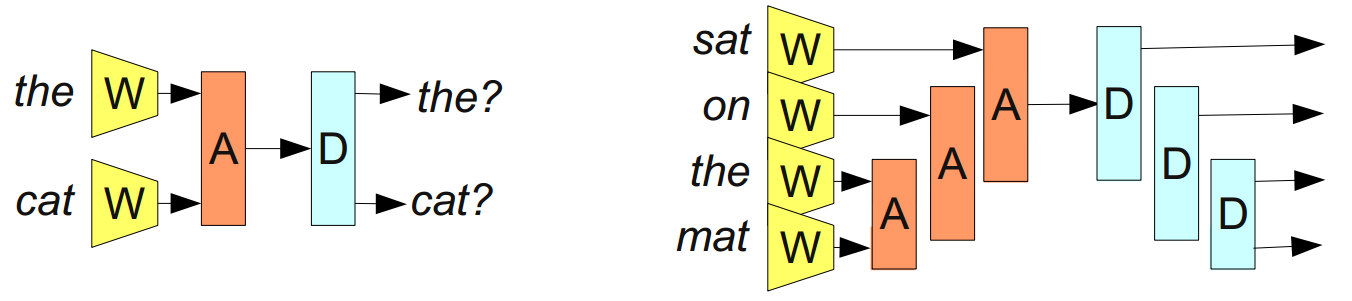

One major goal has been to create a "reversible" sentence representation, that is, one from which we can reconstruct an actual sentence with roughly the same meaning. For example, we can try to introduce a dissociation module, $D$, that does the opposite of $A$:

From Bottou (2011)
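The reconstruction goal can be made concrete as an error between the two original vectors and what `D` recovers from their merge. The Python sketch below uses hand-picked linear `A` and `D` (hypothetical, chosen for illustration; in practice both would be learned, for example by minimizing this very error). It also hints at why the problem is hard: this `A` squeezes two 2-dimensional vectors into one 2-dimensional vector, so reconstruction is exact only when the inputs carry little enough information.

```python
def A(left, right):
    """Hypothetical merge: keep only the first coordinate of each child."""
    return [left[0], right[0]]


def D(parent):
    """Hypothetical split: an approximate inverse of A above."""
    return [parent[0], 0.0], [parent[1], 0.0]


def reconstruction_error(left, right):
    """Squared error between (left, right) and D(A(left, right))."""
    l_hat, r_hat = D(A(left, right))
    return sum((a - b) ** 2 for a, b in zip(left + right, l_hat + r_hat))


print(reconstruction_error([0.7, 0.0], [-0.2, 0.0]))  # 0.0: everything fits in the parent
print(reconstruction_error([0.7, 0.5], [-0.2, 0.1]))  # about 0.26: second coordinates lost
```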

If we could do this, we would have an extremely powerful tool. For example, we could try to build a sentence representation shared by two languages and use it for machine translation.

Unfortunately, this turns out to be very difficult. Extremely difficult. But since there is reason for hope, many people are working hard on it.

Recently, Cho et al. (2014) made progress on representing phrases, with a model that encodes an English phrase and decodes it into a French phrase. Just look at the phrase representations it learns!

A small section of the phrase representation space, compressed using t-SNE (Cho et al. (2014).)

Criticism

I have heard that some of the results reviewed above have been criticized by researchers in other fields, in particular linguists and natural language processing specialists. The criticism targets the results themselves, the conclusions drawn from them, and the methods used to compare them with other approaches.

I don't feel prepared enough to articulate exactly what the problem is. I would be glad if someone did so in the comments.

Conclusion

Deep learning in the service of representation learning is a powerful approach that seems to answer the question of why neural networks are so effective. It also has a remarkable elegance: why are neural networks effective? Because better ways of representing data emerge by themselves as we optimize layered models.

Deep learning is a very young field where theory is not yet settled and views change quickly. With that caveat, I will say that, in my impression, the representation-learning perspective on neural networks is currently very popular.

In this post I have described many results that I find impressive, but my main goal is to lay the groundwork for the next post, which will explore connections between deep learning, type theory, and functional programming. If you are interested and don't want to miss it, you can subscribe to my RSS feed.

The author also asks readers to report any errors or inaccuracies in the comments; see the original article.

Acknowledgments

Thanks to Eliana Lorch, Yoshua Bengio, Michael Nielsen, Laura Ball, Rob Gilson and Jacob Steinhardt for their comments and support.

From the translator: I thank Denis Kiryanov and Vadim Lebedev for their helpful comments.

Article based on information from habrahabr.ru

In recent years, methods that use deep learning neural networks (deep neural networks), took a leading role in pattern recognition. Thanks to them, the bar for quality methods in computer vision have risen significantly. In the same direction moves and speech recognition.

Results results, but why they are so cool solve the problem?

The post illuminated some impressive results using the deep neural networks in natural language processing (Natural Language Processing; NLP). Thus I hope to explain one of the answers to the question of why deep neural networks work.

the

Neural network with one hidden layer

A neural network with a hidden layer is universal: for a sufficiently large number of hidden nodes, it can build an approximation of any function. This is often quoted (and often misunderstood and applied) theorem.

This is true because the hidden layer can be simply used as a "lookup table".

For simplicity, consider a perceptron. This is a very simple neuron that is triggered if the value exceeds the threshold value, and fails if not. The perceptron with binary inputs and binary outputs (i.e. 0 or 1). The number of variants in input value is limited. Each of them can be mapped to a neuron in the hidden layer, which only works for a given input.

Analysis of "conditions" for each individual entry will require

of hidden neurons (when n of data). In fact, usually it's not so bad. Can be "terms" that match multiple input values, and can be "overlapping each other" "terms" that reach the correct inputs on your crossing.

of hidden neurons (when n of data). In fact, usually it's not so bad. Can be "terms" that match multiple input values, and can be "overlapping each other" "terms" that reach the correct inputs on your crossing.Then can use a connection between this neuron and neurons at the output to set the final value for this particular case.

Versatility have not only the perceptrons. Network sigmoidal in neurons (and other functions of activation) is also universal: given sufficient number of hidden neurons, they can build arbitrarily accurate approximation of any continuous function. To demonstrate this much more difficult, as you cannot just take and isolate the inputs from each other.

Therefore, it appears that a neural network with one hidden layer is in fact universal. However, there is nothing impressive or amazing. The fact that the model can work as a cross-reference table — not the strongest argument in favor of neural networks. It simply means that the model is in principle able to cope with the task. Under the universality means only that the network can adapt to any sample, but this does not mean that it is able to adequately interpolate the solution to work with new data.

No, versatility still does not explain why neural networks work so well. The correct answer is somewhat deeper. To understand this, first consider a few concrete results.

the

Vector representations of words (word embeddings)

Begin with a particularly interesting sub-area deep learning — vector representations of words (word embeddings). In my opinion, now vector representation is one of the hottest research topics in deep learning, although they were first proposed by Bengio, et al. more than 10 years ago.

Vector representation was first proposed in the works of Bengio et al, 2001 and Bengio et al, 2003 a few years before the resurrection of deep learning in 2006, when the neural network was not yet in fashion. The idea of distributed representations and as such even older (see, for example, Hinton 1986).

In addition, I think that this is one of those tasks which are best formed an intuitive understanding of why deep learning is so effective.

Vector representation of the word

— a parameterized function that displays words from a natural language to vectors of large dimension (say, from 200 to 500 dimensions). For example, it might look like this:

— a parameterized function that displays words from a natural language to vectors of large dimension (say, from 200 to 500 dimensions). For example, it might look like this:

(Usually, this function is specified by a lookup table defined by the matrix

in which each word corresponds to the string

in which each word corresponds to the string  ).

).W is initialized with random vectors for each word. She will study to give meaningful values for the solution of a problem.

For example, we could train the network on the definition of "correct" or 5-grams (a sequence of five words, e.g. 'the cat sat on the mat'). 5-grams can be easily derived from Wikipedia, and then half of them "spoil", replacing each one of the words at random (for example, 'the cat sat the mat song'), as this almost always makes a 5-gram senseless.

a Modular network to determine "correct" or 5-grams (Bottou (2011)).

The model that we teach, skip every word of 5-grams using W to obtain a release of their vector representations, and submit them to the input of another module, R, which tries to predict the "correct" 5-gram or not. Want to make it:

To predict these values accurately, the network needs to choose a good settings for W and R.

However, this task is boring. Likely, the solution will help to find in the texts of grammatical errors or something in this spirit. But what's really valuable is obtained W.

(Actually, the whole point of the task in teaching W. We could consider solving other problems; for example, one of the most common next word prediction in a sentence. But that's not our goal. In the remainder of this section we will talk about many of the results of the vector representations of words and not be distracted by the lighting difference between the approaches).

In order to see the structure of the space vector representations, we can represent them using the clever method of data visualization for high — dimensional tSNE.

Visualization of vector representations of words using tSNE. On the left, the "area numbers" to the right "area jobs" (from Turian et al. (2010)).

This "map of words" seems quite meaningful. "Similar" words close, and to see what other views are closer to this, it appears that at the same time, and friends "like".

Whose vector representations are closest to the representation of the word? (Collobert et al. (2011).)

It seems natural that the network would correlate with similar values close to each other vectors. If you replace the word to a synonym ("some sing well"

"few people sing well"), the "correctness" of the sentence does not change. It would seem, offers at the entrance differ significantly, but since W "shifts" represent synonyms ("some" and "few") to each other, for R, little has changed.

"few people sing well"), the "correctness" of the sentence does not change. It would seem, offers at the entrance differ significantly, but since W "shifts" represent synonyms ("some" and "few") to each other, for R, little has changed.It is a powerful tool. The number of possible 5-grams is enormous, while the size of training samples is relatively small. The convergence of representations of similar words allows us, taking one sentence as if to work with the whole class "similar" to him. It is not limited to replacement of synonyms, for example, the possible substitution of words of the same class ("blue wall"

"the wall is red"). Moreover, there is a sense in replacing several words ("wall of blue"

"the wall is red"). Moreover, there is a sense in replacing several words ("wall of blue"  "the ceiling is red"). The number of such "similar phrases" increases exponentially with the number of words.

"the ceiling is red"). The number of such "similar phrases" increases exponentially with the number of words. Already in the fundamental work A Neural Probabilistic Language Model (Bengio et al. 2003) given a meaningful explanation of why a vector representation is such a powerful tool.

Obviously, this property of W would be very useful. But how can it teach? It is very likely that many times W faces a sentence of "wall of blue" and recognizes it as correct before you see the sentence "the red wall". Shift "red" is closer to "blue" improves network performance.

We still have to deal with examples of occurrences of each word, but analogy allows to generalize to new combinations of words. With all the words whose meaning we understand, we previously faced, but the meaning of the sentence can be understood, never haven't heard. The same can, and neural networks.

Mikolov et al. (2013a)

Vector representations have an much more remarkable property: it seems that relations of analogy between words are determined by the value of the vector difference between their perceptions. For example, apparently, the vector of the difference between "male-female" words — permanent:

Perhaps this did not much surprise. In the end, the presence of pronouns, having gender, suggests that the replacement of the word "kills" the grammatical correctness of a sentence. We write: "she is my aunt," but "he is uncle." Similarly, "he is the king" and "she — Queen." If we see the text "it is uncle", it is most likely a grammatical error. If half the cases, words are replaced randomly, then that must be the case.

"Of course!" — shall we say, looking back on past experience. — "Vector representations are able to represent gender. Surely there is a separate dimension for the floor. And as for the plural/singular. Yes, such relations and so easily recognized!"

It turns out, however, that more complex relations "encoded" the same. Just wonders in a sieve (well, almost)!

a Pair of relations (Mikolov et al. (2013b).)

It is important that all these properties are W side effects. We did not impose requirements that represent similar words should be close one to the other. We have not tried to adjust it a little analogy, using the difference vectors. We just tried to learn to check "correct" if the proposal and the properties come from somewhere themselves in the process of solving the optimization problem.

This seems to be a great strength of neural networks: they automatically learn better ways to represent data. Representing data well, in turn, seems essential to solving many machine learning problems. Word embeddings are one of the most striking examples of learning a representation.


Shared representations

The properties of word embeddings are certainly interesting, but can we use them for something useful, beyond silly tasks such as checking whether a 5-gram is "valid"?

W and F are trained to perform task A. Later, G can learn to perform task B using W.

We learned the word embedding to do well on a simple task, but given the remarkable properties we have already seen, we might suspect that such embeddings are useful for NLP tasks in general. In fact, word representations like these are extremely important:

"The use of word representations... has become a key 'secret sauce' for the success of many NLP systems in recent years, across tasks including named entity recognition, part-of-speech tagging, parsing, and semantic role labeling."

(Luong et al. (2013).)

This general strategy, learning a good representation on task A and then using it to solve task B, is one of the main tricks in the deep learning toolbox. It goes by different names depending on the details: pretraining, transfer learning, and multi-task learning. One of the great strengths of this approach is that it allows the representation to learn from more than one kind of data.
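As a minimal sketch of this idea, assume a word embedding W has already been trained on some task A and is now frozen (the vectors below are hypothetical). A small classifier G, here plain logistic regression chosen purely for illustration, is then trained for a new task B using only W's vectors as input features; W itself is never updated.

```python
import math

# Hypothetical embedding W, assumed already learned on task A and now frozen.
W = {
    "man": [1.0, 0.0], "uncle": [0.0, 0.1], "king": [0.5, -0.1],
    "woman": [1.0, 1.0], "aunt": [0.0, 1.1], "queen": [0.5, 0.9],
}

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_g(examples, embeddings, lr=0.5, epochs=200):
    """Train a logistic-regression classifier G for task B on top of the frozen
    embedding with stochastic gradient descent. Only G's weights and bias are
    updated; the embedding vectors are read, never modified."""
    dim = len(next(iter(embeddings.values())))
    weights, bias = [0.0] * dim, 0.0
    for _ in range(epochs):
        for word, label in examples:
            x = embeddings[word]
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - label  # gradient of the log loss with respect to the logit
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return lambda word: sigmoid(sum(w * xi for w, xi in zip(weights, embeddings[word])) + bias)

# Toy task B: "is this a female word?", with "queen" held out of training.
train = [("man", 0), ("woman", 1), ("uncle", 0), ("aunt", 1), ("king", 0)]
G = train_g(train, W)
print(round(G("queen")))  # 1
```

Because the embedding already places gendered words consistently, G generalizes to the held-out word it never saw a label for.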

There is a counterpart to this trick. Instead of learning a representation of one kind of data and using it to solve several kinds of tasks, we can map several kinds of data into a single representation!

One nice example of this trick is the bilingual word embedding proposed by Socher et al. (2013a). We can learn to embed words from two languages in a single shared space. In this work, English words and Mandarin Chinese (Putonghua) words are embedded in the same space.

We train two word embeddings, $W_{en}$ and $W_{zh}$, the same way as above. However, we know that certain English and Chinese words have similar meanings. So we optimize an additional criterion: the representations of known translations should be close to each other.

Of course, in the end we observe that the words we knew to be similar are placed close together. Since that is exactly what we optimized for, this is no surprise. Much more interesting is that translations we did not know about also end up close together.
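The extra criterion can be sketched as a penalty term. Assuming, for illustration, that it is the sum of squared distances between known translation pairs (the exact formulation in Socher et al. (2013a) may differ), the full objective would be roughly each language's own loss plus λ times this penalty. The snippet below, with hypothetical 2-dimensional vectors, shows only the penalty and a single gradient step on it, which moves both vectors of each known pair toward each other.

```python
# Hypothetical 2-d vectors; real embeddings would be learned jointly with
# each language's own training objective.
W_en = {"king": [1.0, 0.0]}
W_zh = {"国王": [0.0, 2.0]}
known_translations = [("king", "国王")]

def alignment_penalty(pairs, en, zh):
    """Sum of squared Euclidean distances between known translation pairs."""
    return sum(
        sum((a - b) ** 2 for a, b in zip(en[e], zh[z])) for e, z in pairs
    )

def alignment_step(pairs, en, zh, lr=0.1):
    """One gradient-descent step on the penalty alone. For ||u - v||^2 the
    gradient is 2(u - v) for u and -2(u - v) for v, so each vector of a
    known pair moves toward its partner."""
    for e, z in pairs:
        diff = [a - b for a, b in zip(en[e], zh[z])]
        en[e] = [a - lr * 2 * d for a, d in zip(en[e], diff)]
        zh[z] = [b + lr * 2 * d for b, d in zip(zh[z], diff)]

print(alignment_penalty(known_translations, W_en, W_zh))  # 5.0
alignment_step(known_translations, W_en, W_zh)
print(alignment_penalty(known_translations, W_en, W_zh))  # about 1.8
```

In this sketch only the anchor words move; in the full model, the other words are carried along by each language's own objective.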

Perhaps this is no longer surprising in light of our experience with word embeddings. Embeddings pull similar words together, so if we know that an English word and a Chinese word mean roughly the same thing, their synonyms should also end up nearby. We also know that word pairs related by, for example, a difference in gender differ by a constant vector. It seems that if we pull enough translations together, these difference vectors will also be forced to agree in the two languages. As a result, if the "male versions" of two words translate into each other, the "female versions" should automatically translate into each other as well.

Intuitively, it feels as if the two languages have a similar "shape", and by forcing them to line up at certain points, we pull the other representations into the right places.

Visualization of the bilingual word embedding using t-SNE. Chinese is shown in green, English in yellow (Socher et al. (2013a)).

With two languages, we learn a shared representation for two similar kinds of data. But we can also "fit" very different kinds of data into a single space.

Recently, deep learning has been used to build models that embed images and words in a single representation space.

Earlier work modeled the joint distribution of tags and images, but the approach here is quite different.

The basic idea is that we classify an image by outputting a vector in the word embedding space. Images of dogs are mapped near the representation of the word "dog", images of horses near "horse", images of cars near "car", and so on.
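In its simplest form, classification then reduces to a nearest-neighbor lookup among label vectors. The sketch below assumes the image network has already produced a vector in word space (the `cat_image` and `truck_image` vectors are made up) and uses cosine similarity. All label vectors are hypothetical, and the cited papers use more elaborate procedures.

```python
import math

# Hypothetical word vectors for class names. Assume the image model was
# trained only on "dog", "horse" and "car"; "cat" and "truck" had no images.
label_vectors = {
    "dog":   [1.0, 0.1, 0.0],
    "cat":   [0.9, 0.4, 0.0],
    "horse": [0.6, -0.5, 0.1],
    "car":   [0.0, 0.0, 1.0],
    "truck": [0.1, 0.3, 0.9],
}

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def classify(image_vector, labels):
    """Return the label whose word vector is most similar to the image's vector."""
    return max(labels, key=lambda name: cosine(image_vector, labels[name]))

# Made-up outputs of the image network for a cat photo and a truck photo
cat_image = [0.85, 0.45, 0.05]
truck_image = [0.1, 0.25, 0.95]
print(classify(cat_image, label_vectors))    # cat
print(classify(truck_image, label_vectors))  # truck
```

Because unseen labels are just more vectors in the same space, adding a class requires no retraining of the image network, only a word vector for its name.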

The most interesting thing happens when we test the model on new classes of images. For example, what happens if we ask the model to classify an image of a cat when it was never specifically trained to recognize cats, that is, to map them to a vector close to "cat"?

Socher et al. (2013b)

It turns out that the network copes with new kinds of images quite reasonably. Images of cats are not mapped to random points in the space. Instead, they land in the general neighborhood of the "dog" vector and, in fact, quite close to the "cat" vector. Similarly, images of trucks end up closest to the "truck" vector, which is near the related "car" vector.

Socher et al. (2013b)

Members of the Stanford group did this with only 8 known classes and 2 unknown ones. The results are already impressive. But with so few classes, there are very few points from which to interpolate the relationship between images and the semantic space.

A Google research team built a much larger version of the same idea, using 1,000 categories instead of 8, at about the same time (Frome et al. (2013)), and then proposed another variation (Norouzi et al. (2014)). Both works build on a strong image classification model (Krizhevsky et al. (2012)), but they place images in the word embedding space in different ways.
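To make "different ways" concrete, here is a rough sketch of one of them as we understand the Norouzi et al. (2014) approach (a convex combination of semantic embeddings): take the classifier's top T predicted seen classes and average their word vectors, weighted by the predicted probabilities. The class names, probabilities, vectors, and the choice T = 2 are hypothetical, and details of the published method may differ.

```python
import math

# Hypothetical 2-d word vectors. "dog", "horse" and "car" are seen classes the
# image classifier was trained on; "pony" is an unseen class with no images.
label_vectors = {
    "dog":   [1.0, 0.0],
    "horse": [0.0, 1.0],
    "car":   [-1.0, -1.0],
    "pony":  [0.6, 0.8],
}

def conse_embedding(class_probs, vectors, top_t=2):
    """Probability-weighted average of the word vectors of the top_t most
    likely seen classes (weights renormalized to sum to 1)."""
    top = sorted(class_probs, key=class_probs.get, reverse=True)[:top_t]
    total = sum(class_probs[c] for c in top)
    dim = len(vectors[top[0]])
    return [sum(class_probs[c] * vectors[c][i] for c in top) / total for i in range(dim)]

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def nearest_label(vec, vectors):
    return max(vectors, key=lambda name: cosine(vec, vectors[name]))

# Made-up classifier output for a photo of a pony
probs = {"dog": 0.55, "horse": 0.40, "car": 0.05}
emb = conse_embedding(probs, label_vectors)
print(nearest_label(emb, label_vectors))  # pony
```

The appeal of this design is that the image classifier needs no retraining: its ordinary class probabilities are turned into a point in word space after the fact.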

The results are impressive. Even if the model cannot map images of unknown classes to the exact vector for that class, it at least gets them into the right neighborhood. So if we ask it to classify images of unknown classes that differ substantially from one another, it can at least tell those classes apart.

Even if I have never seen an Aesculapian snake or an armadillo, if you show me a picture of each, I can tell you which is which, because I have a general idea of what sort of animal each word refers to. Such networks can do this too.

(We have often relied on the reasoning "these words are similar". But it seems that much stronger results could be obtained from relationships between words. In our word embedding space, the difference between the "male" and "female versions" of words is constant. Similarly, the space of image representations has consistent features that distinguish between the sexes. A beard, a mustache, and a bald head are well-recognized signs of a man. Breasts and, less reliably, long hair, makeup, and jewelry are obvious indicators of a woman. Even if you have never seen a king, if you see a queen (identified by her crown) with a beard, you will probably decide to use the "male version" of the word "queen".)

I understand that physical indicators of sex can be misleading. For example, I do not mean that everyone who is bald is a man, or that everyone who has breasts is a woman. But the fact that they usually hold helps us set our initial expectations.

Shared embeddings are a breathtaking area of research, and they make a very persuasive case for the representation-focused perspective of deep learning.


Recursive neural networks

We began our discussion of word embeddings with the following network:

A modular network that learns word embeddings (Bottou (2011)).

The diagram above shows a modular network built from two modules, W and R. This approach, constructing neural networks out of smaller "neural modules" that can be composed together, is not very widespread. However, it has proven very successful in natural language processing.

Models like the one described above are powerful, but they have one annoying limitation: the number of inputs is fixed.

We can overcome this by adding an association module, A, which "merges" two word or phrase representations into one.

From Bottou (2011)

By merging sequences of words, A lets us represent phrases and even whole sentences. And because we can merge any number of words, the number of inputs no longer has to be fixed.

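A minimal sketch of an association module, assuming the common single-layer form A(x, y) = tanh(M[x; y] + b), where [x; y] is the concatenation of the two vectors. The form, the dimension d = 2, and the hand-set parameters M and b are assumptions for illustration; Bottou (2011) does not prescribe them. Folding A over a list shows how any number of words yields one fixed-size vector.

```python
import math
from functools import reduce

# Hypothetical parameters for d = 2: M is a d x 2d matrix, b a length-d bias.
M = [[0.5, 0.0, 0.5, 0.0],
     [0.0, 0.5, 0.0, 0.5]]
b = [0.0, 0.0]

def A(x, y):
    """Association module: merge two d-dimensional vectors into one,
    A(x, y) = tanh(M [x; y] + b)."""
    xy = x + y  # list concatenation gives [x; y]
    return [math.tanh(sum(m * v for m, v in zip(row, xy)) + bi)
            for row, bi in zip(M, b)]

def merge_left_to_right(vectors):
    """Merge any number of word vectors: A(...A(A(v1, v2), v3)..., vn)."""
    return reduce(A, vectors)

# Hypothetical 2-d word vectors
words = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(merge_left_to_right(words[:2]))  # both entries equal tanh(0.5)
print(len(merge_left_to_right(words)))  # 2
```

Whatever the number of inputs, the output has the same dimension as a single word vector, which is what lets the result be fed back into A.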
It does not necessarily make sense to merge the words of a sentence strictly in order. The sentence "the cat sat on the mat" can naturally be split into segments: "((the cat) (sat (on (the mat))))". We can apply A according to this bracketing:

From Bottou (2011)
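Applying A along the bracketing is a simple recursion over a binary tree. In this sketch the tree is written as nested Python tuples, the word vectors are hypothetical, and A is a stand-in (tanh of the elementwise sum) rather than a trained module.

```python
import math

# Hypothetical 2-d word vectors
W = {"the": [0.1, 0.0], "cat": [0.9, 0.2], "sat": [0.0, 0.8],
     "on": [0.2, 0.1], "mat": [0.7, 0.6]}

def A(x, y):
    # Stand-in association module (assumed): tanh of the elementwise sum.
    return [math.tanh(a + b) for a, b in zip(x, y)]

def encode(tree):
    """Encode a bracketed sentence: a leaf is a word string and is looked up
    in W; an inner node is a (left, right) pair whose encodings are merged by A."""
    if isinstance(tree, str):
        return W[tree]
    left, right = tree
    return A(encode(left), encode(right))

# ((the cat) (sat (on (the mat))))
sentence = (("the", "cat"), ("sat", ("on", ("the", "mat"))))
print(encode(sentence))
```

The same function handles any bracketing, so the shape of the computation follows the parse of each sentence rather than a fixed input size.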

These models are often called "recursive neural networks", because the output of one module is fed into another module of the same type. They are also sometimes called "tree-structured neural networks".

Recursive neural networks have achieved considerable success on several natural language processing tasks. For example, Socher et al. (2013c) use them to predict the sentiment of a sentence:

(From Socher et al. (2013c).)

A major goal is to create a "reversible" sentence representation, that is, one from which we can reconstruct a sentence with roughly the same meaning. For example, we can try to introduce a dissociation module, D, that undoes what A does:

From Bottou (2011)
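One way to state this goal is as a reconstruction error: D(A(x, y)) should be close to (x, y). The sketch below assumes deliberately simple hand-written modules (A averages, D copies its input twice), which are hypothetical; in practice both would be learned to make this error small over many phrases. The example shows that merging is lossy: identical inputs are recovered exactly, different ones are not.

```python
# Hypothetical fixed modules for d = 2; trained ones would be learned and
# usually nonlinear.
def A(x, y):
    """Association: average the two vectors (a fixed, lossy merge)."""
    return [(a + b) / 2 for a, b in zip(x, y)]

def D(z):
    """Dissociation: attempt to recover (x, y) from the merged vector z."""
    return list(z), list(z)

def reconstruction_error(x, y):
    """Squared error between (x, y) and D(A(x, y)). Training would adjust
    A and D to make this small on real phrases."""
    x_hat, y_hat = D(A(x, y))
    return sum((a - b) ** 2 for a, b in zip(x + y, x_hat + y_hat))

print(reconstruction_error([1.0, 0.0], [1.0, 0.0]))  # 0.0
print(reconstruction_error([1.0, 0.0], [0.0, 1.0]))  # 1.0
```

Squeezing two d-dimensional vectors into one cannot be lossless in general, which hints at why building a truly reversible representation is hard.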

If this works, we will have an incredibly powerful tool. For example, we could try to build a sentence representation shared by two languages and use it for machine translation.

Unfortunately, this turns out to be quite difficult. Awfully difficult. But since there is reason to hope, many people are working on it.

Recently, Cho et al. (2014) made progress on representing phrases with a model that "encodes" an English phrase and "decodes" it as a French phrase. Just look at the phrase representations it learns!

A small section of the phrase representation space, visualized with t-SNE (Cho et al. (2014).)


Criticism

I have heard some of the results reviewed above criticized by researchers in other fields, in particular linguists and natural language processing specialists. The criticism concerns the results themselves, the conclusions drawn from them, and the way they are compared with other approaches.

I do not feel qualified to articulate exactly what the problem is. I would be glad if someone did so in the comments.


Conclusion

The representation perspective on deep learning is a powerful view that seems to answer the question of why neural networks are so effective. Beyond that, it has an amazing beauty: why are neural networks effective? Because better ways of representing data emerge on their own while optimizing layered models.

Deep learning is a very young field, where theories are not yet established and views change quickly. With that caveat, my impression is that the representation-focused perspective on neural networks is currently very popular.

In this post I reviewed many research results that I find impressive, but my main goal is to set the stage for a future post exploring the connections between deep learning, type theory, and functional programming. If you are interested and do not want to miss it, you can subscribe to my RSS feed.

The author also asks readers to report errors or inaccuracies in the comments on the original article.


Acknowledgement

Thanks to Eliana Lorch, Yoshua Bengio, Michael Nielsen, Laura Ball, Rob Gilson and Jacob Steinhardt for their comments and support.

From the translator: I thank Denis Kiryanov and Vadim Lebedev for their helpful comments.